Around the time I started saving the leaderboard elo scores, there should have been at least one matchmaking change:

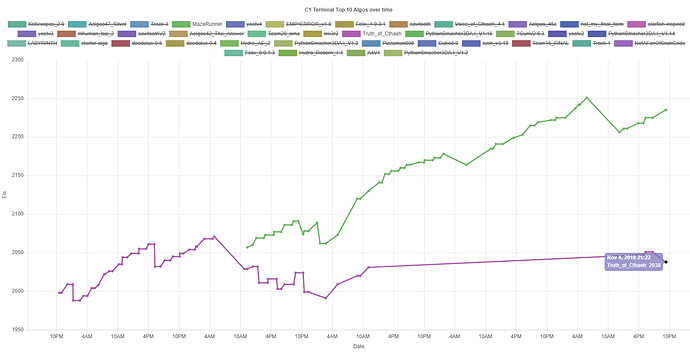

Now, look at how the elo of the top algos has developed since then:

As you can see, I very professionally marked the upper and lower boundaries of the top 10, and both appear to be increasing steadily (roughly linearly).
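To put a number on "roughly linearly", one could fit an ordinary least-squares line to the saved snapshots of each top-10 boundary; the slope is then the elo gained per day. A minimal sketch, assuming the snapshots can be exported as day offsets paired with elo values (the function name `linear_trend` is hypothetical, not part of any existing tooling):

```python
def linear_trend(days, elos):
    """Fit elo = slope * day + intercept by ordinary least squares.

    days: day offsets of the snapshots (e.g. 0, 1, 2, ...)
    elos: boundary elo (e.g. 10th place) at each snapshot
    Returns (slope, intercept); slope is elo gained per day.
    """
    n = len(days)
    if n < 2 or n != len(elos):
        raise ValueError("need at least two paired samples")
    mean_x = sum(days) / n
    mean_y = sum(elos) / n
    # Sum of squared deviations of x, and the x/y cross-deviations
    sxx = sum((x - mean_x) ** 2 for x in days)
    if sxx == 0:
        raise ValueError("day offsets must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, elos))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x
```

For example, `linear_trend([0, 7, 14], [1500, 1540, 1580])` gives a slope of 40/7 (about 5.7 elo per day). Comparing the slopes of the upper and lower boundary would show whether the top 10 is drifting up as a block or spreading out.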

Looking at the number of matches played over time, there was no change in matchmaking speed (it grows completely linearly):

Matchmaking speed is therefore most likely not the cause; after all, the highest elo was stable at around 2000 for a long time.

Note that the elo required to be in the top 10 was even lower before the database was in place.

Almost two weeks ago, I plotted the elo distribution at that time. Below you can see that data compared to the current distribution (based on each user's highest elo on the leaderboard):

(plot available here)

This shows that elo inflated not only in the top 10 but across the whole distribution.

There are almost no algos left below 500 elo, so what applies to the leaderboard also applies to all other algos in this case.
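The distribution comparison is based on each user's highest elo. A minimal sketch of that aggregation, assuming the saved leaderboard snapshots can be flattened into `(user, elo)` pairs; the function names, the 500 threshold default and the 100-point bucket width are illustrative choices, not taken from the original data:

```python
def highest_elo_per_user(entries):
    """Keep each user's highest elo across all (user, elo) snapshot entries."""
    best = {}
    for user, elo in entries:
        if user not in best or elo > best[user]:
            best[user] = elo
    return best


def share_below(best, threshold=500):
    """Fraction of users whose highest elo is below the threshold."""
    if not best:
        return 0.0
    return sum(1 for elo in best.values() if elo < threshold) / len(best)


def bucket_counts(best, width=100):
    """Histogram of highest elo in fixed-width buckets, keyed by bucket start."""
    counts = {}
    for elo in best.values():
        start = int(elo // width) * width
        counts[start] = counts.get(start, 0) + 1
    return dict(sorted(counts.items()))
```

Running `share_below` on an older and a current snapshot set would quantify the "almost no algos below 500" observation as a single number per date.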

Now, I am wondering about two things:

- How were the matchmaking changes able to raise the elo of all algos (are they even the cause of this phenomenon)?
- If so, which changes made this happen?
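For context on the first question: if the ladder used the textbook Elo update with the same K-factor for both algos (an assumption; the actual formula is not stated here), each match would only move points from one algo to the other, so the sum of all ratings would stay constant. A minimal sketch, where `k_a` and `k_b` are hypothetical parameters used only to illustrate that equal K-factors conserve the total while unequal ones do not; it does not claim that this is what actually changed:

```python
def expected_score(r_a, r_b):
    """Textbook Elo expected score of A against B."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def elo_update(r_a, r_b, score_a, k_a=32.0, k_b=32.0):
    """Return updated (r_a, r_b).

    score_a: 1 for an A win, 0 for an A loss, 0.5 for a draw.
    With k_a == k_b, A's gain equals B's loss, so r_a + r_b is unchanged.
    """
    e_a = expected_score(r_a, r_b)
    new_a = r_a + k_a * (score_a - e_a)
    # B's actual and expected scores are the complements of A's
    new_b = r_b + k_b * ((1 - score_a) - (1 - e_a))
    return new_a, new_b
```

For example, `elo_update(1500, 1500, 1)` returns `(1516.0, 1484.0)` (total still 3000), while `elo_update(1500, 1500, 1, k_a=32, k_b=16)` returns `(1516.0, 1492.0)`, adding 8 points to the pool. Under this model, an overall upward drift needs some mechanism that injects points into the system.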