I believe I have ironed out the bugs in my pathing algorithm and can report some results and hopefully some helpful insight as well. I will report numbers for computing 5000 paths, similarly to @thephoenix107, but they should be taken with a huge grain of salt since they are timings from my laptop (which I know is much slower than the servers), and it’s not clear if our numbers are at all comparable since we are probably running quite different benchmarks. These are also numbers simply to compute paths, there is no combat involved.

In any case, here are some results:

Firstly, my algorithm takes about 750ms to compute 5000 paths when the map is empty.

For tests involving a changing map, I have run the following benchmark: Start with an empty map, then in a loop with 5000 iterations, add a firewall with probability p, and remove a firewall with probability 1 - p, then compute a path from a random starting location.

When I add firewalls wither higher probability (p ~= 0.65), the map gets denser and many paths are very short (average length of about 4), which leads to much faster timings. When firewalls are frequently removed (p ~= 0.51), the map remains sparse and so paths are long (avgerage length of about 26) and hence leads to longer timings. Further, average paths in this model are probably shorter than in a realistic game map since actual players obviously want to force their opponent’s units to move a long distance.

In any case, running this takes between 1.3s - 4.1s, depending on the aforementioned probabilities.

How does my algorithm work? I will try to describe…

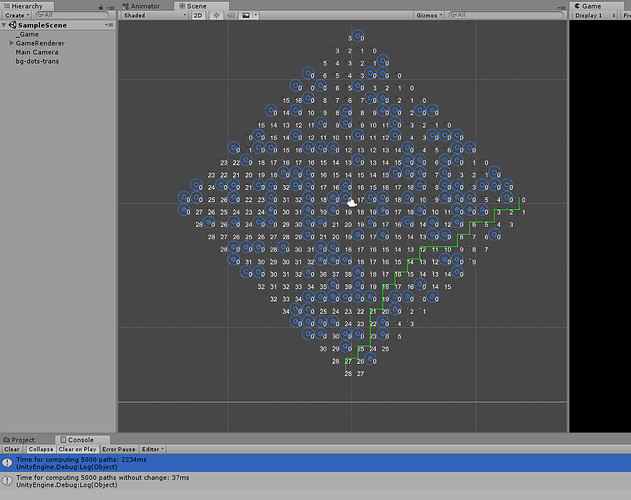

I at first thought some sort of A* variant would be the best way to approach this problem, but I actually had a hard time figuring out how to reuse information between runs when the map changes. Instead, my algorithm is based pretty much entirely on bread-first search “flood-filling”. The zig-zagging state is stored in a separate Unit class. I have 4 copies of the map depending on what the destination edge is, so suppose WLOG that the target edge is the top right.

I assigned each location a (cost, distance) pair, where cost is a tuple and is equal to cost = (-inf, -inf) if the top right edge is reachable from that location. If not, the cost is cost = (|y - 28|, |x - 28|) which encodes the rule for self-destruct seeking out the deepest and closest to the target edge square. The distance stores the number of tiles to reach the ideal location from the current square. This information is enough for a unit to infer from it’s current location where it needs to go next, no matter where it is on the board. And, for each location to know if there is a shorter path through a different neighbour, or if it can provide a shorter path to one if it’s neighbours – this is what the BFS traversals do.

It is then necessary to maintain this information whenever a firewall is added or removed. When a firewall is removed, you simply need to do a BFS from this location^(footnote 1). When a firewall is added, it is possible that the now occupied square is blocking the ideal paths for some routes, so, I decided to do an outwards “reset” of all the nodes emanating from that location whose ideal routes previously used the now blocked square, then re-do the floodfill from there, being careful about whether it is possible to reach the edge from these locations or not (the new firewall could have blocked off previous paths and created a new “pocket”).

Ultimately, it is possible to terminate each BFS after very few updates whenever a tower is changed, since only a small region of space if affected. However, my implementation is now such that changing a large number of towers at once requires doing these updates in sequence. I’m not whether this actually has a negative performance impact in practice or not.

(footnote 1): I had a pernicious edge case where the removed tower may now be in the location of the ideal end-point for it’s pocket.

.

.